It’s no secret that OpenAI’s ChatGPT has some incredible capabilities. For instance, the chatbot can write poetry that resembles Shakespearean sonnets or debug code for a computer program. These abilities are made possible by the massive machine-learning model that ChatGPT is built upon. Researchers have found that when these types of models become large enough, extraordinary capabilities emerge.

But bigger models also require more time and money to train. The training process involves showing hundreds of billions of examples to a model. Gathering so much data is an involved process in itself. Then come the monetary and environmental costs of running many powerful computers for days or weeks to train a model that may have billions of parameters.

“It’s been estimated that training models at the scale of what ChatGPT is hypothesized to run on could take millions of dollars, just for a single training run. Can we improve the efficiency of these training methods, so we can still get good models in less time and for less money? We propose to do this by leveraging smaller language models that have previously been trained,” says Yoon Kim, an assistant professor in MIT’s Department of Electrical Engineering and Computer Science and a member of the Computer Science and Artificial Intelligence Laboratory (CSAIL).

Rather than discarding a previous version of a model, Kim and his collaborators use it as the building block for a new model. Using machine learning, their method learns to “grow” a larger model from a smaller model in a way that encodes knowledge the smaller model has already gained. This enables faster training of the larger model.

Their technique saves about 50 percent of the computational cost required to train a large model, compared to methods that train a new model from scratch. Plus, the models trained using the MIT method performed as well as, or better than, models trained with other techniques that also use smaller models to enable faster training of larger models.

Reducing the time it takes to train huge models could help researchers make advancements faster with less expense, while also reducing the carbon emissions generated during the training process. It could also enable smaller research groups to work with these massive models, potentially opening the door to many new advances.

“As we look to democratize these types of technologies, making training faster and less expensive will become more important,” says Kim, senior author of a paper on this technique.

Kim and his graduate student Lucas Torroba Hennigen wrote the paper with lead author Peihao Wang, a graduate student at the University of Texas at Austin, as well as others at the MIT-IBM Watson AI Lab and Columbia University. The research will be presented at the International Conference on Learning Representations.

The bigger the better

Large language models like GPT-3, which is at the core of ChatGPT, are built using a neural network architecture called a transformer. A neural network, loosely based on the human brain, is composed of layers of interconnected nodes, or “neurons.” Each neuron contains parameters, which are variables learned during the training process that the neuron uses to process data.

Transformer architectures are unique because, as these types of neural network models get bigger, they achieve much better results.

“This has led to an arms race of companies trying to train larger and larger transformers on larger and larger datasets. More so than other architectures, it seems that transformer networks get much better with scaling. We’re just not exactly sure why this is the case,” Kim says.

These models often have hundreds of millions or billions of learnable parameters. Training all these parameters from scratch is expensive, so researchers seek to accelerate the process.

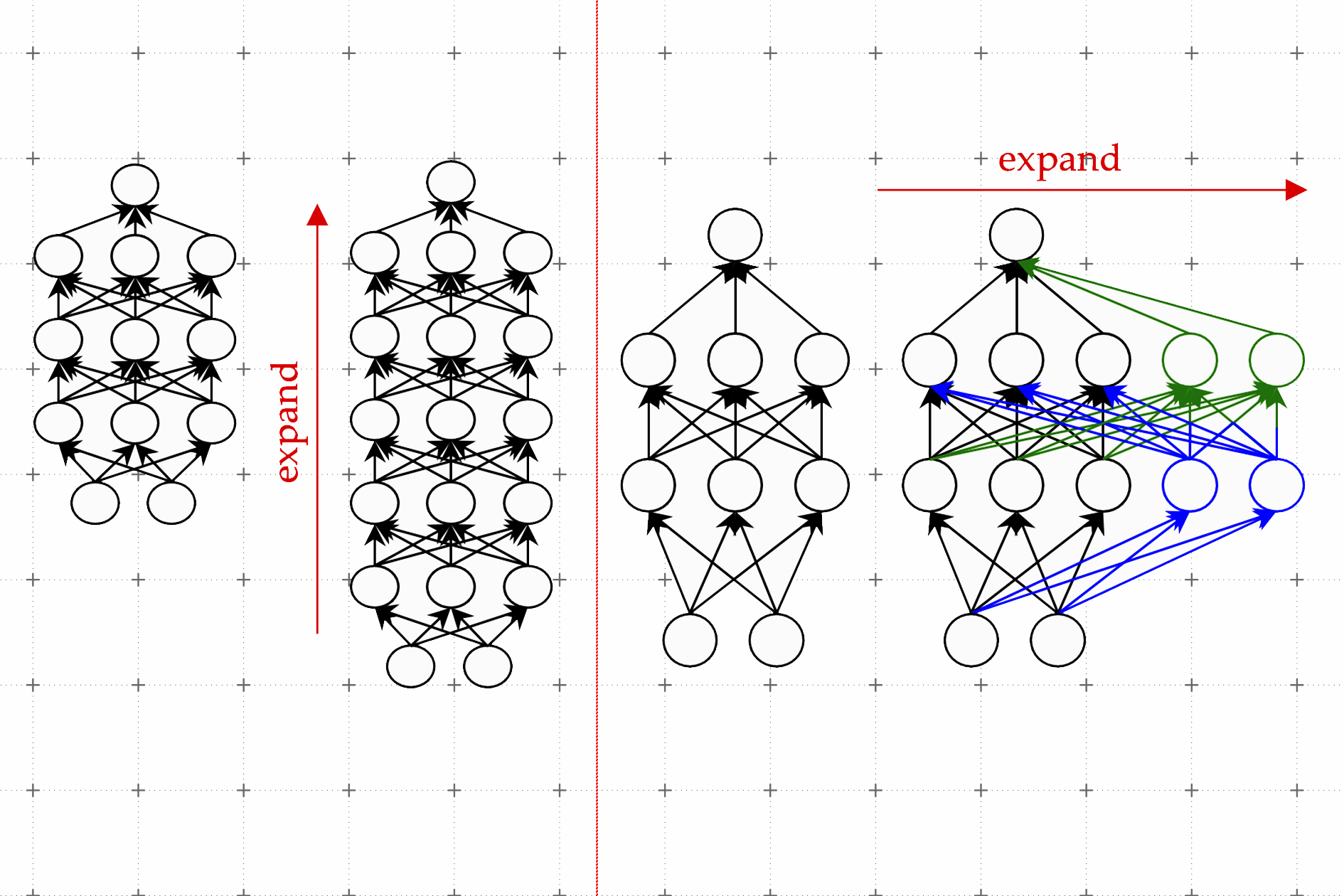

One effective technique is known as model growth. Using the model growth method, researchers can increase the size of a transformer by copying neurons, or even entire layers of a previous version of the network, then stacking them on top. They can make a network wider by adding new neurons to a layer or make it deeper by adding additional layers of neurons.
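As a rough illustration of copy-based growth, the sketch below treats a network as nothing more than a list of weight matrices, with one row per neuron. The function names `stack_layers` and `widen_layer` are hypothetical, and the widening step ignores the matching change that the next layer's inputs would need in a real network. It shows only the copying idea, not the researchers' method.

```python
import copy

def stack_layers(layers):
    """Deepen: copy every layer of the small network and stack the copies on top."""
    return layers + copy.deepcopy(layers)

def widen_layer(weights, new_width):
    """Widen: add neurons (rows) to one layer by copying existing neurons in order."""
    old_width = len(weights)
    return [list(weights[i % old_width]) for i in range(new_width)]

# A toy "small network": two layers, each with 2 neurons taking 2 inputs.
small = [
    [[0.1, 0.2], [0.3, 0.4]],  # layer 0
    [[0.5, 0.6], [0.7, 0.8]],  # layer 1
]

deeper = stack_layers(small)      # 4 layers; layers 2 and 3 are copies of 0 and 1
wider = widen_layer(small[0], 4)  # 4 neurons; neurons 2 and 3 are copies of 0 and 1
```

Every new neuron or layer here starts as an exact duplicate of an old one, which is the behavior the learned approach described next moves away from.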

In contrast to previous approaches for model growth, parameters associated with the new neurons in the expanded transformer are not just copies of the smaller network’s parameters, Kim explains. Rather, they are learned combinations of the parameters of the smaller model.

Learning to grow

Kim and his collaborators use machine learning to learn a linear mapping of the parameters of the smaller model. This linear map is a mathematical operation that transforms a set of input values, in this case the smaller model’s parameters, to a set of output values, in this case the parameters of the larger model.
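To make the idea of a linear map over parameters concrete, here is a minimal sketch that assumes the parameters are flattened into a plain list and the map is a small matrix. The helper `apply_linear_map` and all numbers are made up for illustration. In the method described here the matrix entries would be learned from data, whereas this sketch fixes them by hand.

```python
def apply_linear_map(matrix, params):
    """Multiply a (large x small) matrix by a flat list of small-model parameters."""
    return [sum(m * p for m, p in zip(row, params)) for row in matrix]

small_params = [1.0, 2.0]

# Plain copying is the special case where every row selects exactly one old parameter.
copy_map = [
    [1.0, 0.0],
    [0.0, 1.0],
    [1.0, 0.0],  # third new parameter duplicates the first old one
]

# A general map can mix old parameters; these weights are illustrative, not learned.
mixed_map = [
    [1.0, 0.0],
    [0.0, 1.0],
    [0.5, 0.5],  # third new parameter blends both old ones
]

copied = apply_linear_map(copy_map, small_params)  # [1.0, 2.0, 1.0]
mixed = apply_linear_map(mixed_map, small_params)  # [1.0, 2.0, 1.5]
```

Seen this way, copy-based growth is one particular linear map, and learning the map lets the new parameters be combinations rather than duplicates.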

Their method, which they call a learned Linear Growth Operator (LiGO), learns to expand the width and depth of a larger network from the parameters of a smaller network in a data-driven way.

But the smaller model may actually be quite large (perhaps it has a hundred million parameters), and researchers might want to make a model with a billion parameters. So the LiGO technique breaks the linear map into smaller pieces that a machine-learning algorithm can handle.
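Some back-of-the-envelope arithmetic shows why a single unstructured map is impractical at these sizes. The sketch below also shows one possible way to split the map into smaller pieces: growing each weight matrix with two thin expansion matrices. That particular factorization, the hidden sizes 768 and 1024, and the names `grow_width`, `a`, and `b` are assumptions made for illustration; the article does not spell out how LiGO divides the map.

```python
def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def transpose(m):
    return [list(col) for col in zip(*m)]

def grow_width(w_small, a, b):
    """Return a @ w_small @ b^T, where a and b are (d2 x d1) expansion matrices."""
    return matmul(matmul(a, w_small), transpose(b))

# One dense map between the parameter counts mentioned in the text.
small_params = 100_000_000
large_params = 1_000_000_000
dense_entries = small_params * large_params  # 10**17 entries

# Hypothetical hidden sizes: one d1 x d1 weight matrix grown to d2 x d2.
d1, d2 = 768, 1024
dense_per_matrix = (d2 * d2) * (d1 * d1)  # unstructured map for this one matrix
factored_per_matrix = 2 * d2 * d1         # two thin expansion matrices instead

# Tiny worked example: grow a 2 x 2 matrix to 3 x 3.
w_small = [[1.0, 2.0], [3.0, 4.0]]
a = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]  # acts on output neurons
b = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]  # acts on input dimensions
w_large = grow_width(w_small, a, b)
```

Under these assumed sizes, the factored form needs about 1.6 million entries per weight matrix instead of roughly 618 billion, which is the kind of reduction that makes learning the map feasible.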

LiGO also expands width and depth simultaneously, which makes it more efficient than other methods. A user can tune how wide and deep they want the larger model to be when they input the smaller model and its parameters, Kim explains.

When they compared their technique to the process of training a new model from scratch, as well as to model-growth methods, it was faster than all the baselines. Their method saves about 50 percent of the computational costs required to train both vision and language models, while often improving performance.

The researchers also found they could use LiGO to accelerate transformer training even when they didn’t have access to a smaller, pretrained model.

“I was surprised by how much better all the methods, including ours, did compared to the random initialization, train-from-scratch baselines,” Kim says.

In the future, Kim and his collaborators are looking forward to applying LiGO to even larger models.

The work was funded, in part, by the MIT-IBM Watson AI Lab, Amazon, the IBM Research AI Hardware Center, the Center for Computational Innovation at Rensselaer Polytechnic Institute, and the U.S. Army Research Office.

Source: https://news.mit.edu/2023/new-technique-machine-learning-models-0322