If you are a die-hard Nvidia loyalist, be ready to pay a fortune to use its AI factories in the cloud.

Renting the GPU company’s DGX Cloud, which is an all-inclusive AI supercomputer in the cloud, starts at $36,999 per instance for a month.

The rental includes access to a cloud computer with eight Nvidia H100 or A100 GPUs and 640GB of GPU memory. The price includes the AI Enterprise software to develop AI applications and large language models such as BioNeMo.

“DGX Cloud has its own pricing model, so customers pay Nvidia and they can procure it through any of the cloud marketplaces based on the location they choose to consume it at, but it’s a service that is priced by Nvidia, all inclusive,” said Manuvir Das, vice president for enterprise computing at Nvidia, during a briefing with press.

The DGX Cloud starting price is close to double the $20,000 per month charged by Microsoft Azure for a fully loaded A100 instance with 96 CPU cores, 900GB of storage and eight A100 GPUs.
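The price comparison above can be checked with a few lines of arithmetic. The following is a minimal sketch in Python, using only the figures quoted in this article; the 730-hour month and the function names are assumptions made for illustration, not anything published by Nvidia or Microsoft.

```python
# Figures quoted in the article.
DGX_CLOUD_MONTHLY_USD = 36_999   # DGX Cloud, per instance per month
AZURE_A100_MONTHLY_USD = 20_000  # Azure A100 instance, per month
GPUS_PER_INSTANCE = 8
GPU_MEMORY_GB = 640

# Assumption: an average month of about 730 hours (8,760 hours / 12).
HOURS_PER_MONTH = 730


def price_ratio(price_a: float, price_b: float) -> float:
    """Return how many times larger price_a is than price_b."""
    return price_a / price_b


def cost_per_gpu_hour(monthly_usd: float,
                      gpus: int = GPUS_PER_INSTANCE,
                      hours: int = HOURS_PER_MONTH) -> float:
    """Spread a monthly instance price evenly over every GPU and hour."""
    return monthly_usd / (gpus * hours)


if __name__ == "__main__":
    ratio = price_ratio(DGX_CLOUD_MONTHLY_USD, AZURE_A100_MONTHLY_USD)
    print(f"DGX Cloud / Azure price ratio: {ratio:.2f}x")
    print(f"DGX Cloud per GPU-hour: ${cost_per_gpu_hour(DGX_CLOUD_MONTHLY_USD):.2f}")
    print(f"Azure per GPU-hour: ${cost_per_gpu_hour(AZURE_A100_MONTHLY_USD):.2f}")
    print(f"GPU memory per GPU: {GPU_MEMORY_GB / GPUS_PER_INSTANCE:.0f} GB")
```

The per-GPU-hour figures assume the instance is billed for every hour of the month. They are a rough way to compare the two offers, not a quoted rate from either vendor.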

Oracle is hosting DGX Cloud infrastructure in its RDMA Supercluster, which scales to 32,000 GPUs. Microsoft will launch DGX Cloud next quarter, with Google Cloud’s implementation coming after that.

Customers will have to pay a premium for the latest hardware, but the integration of software libraries and tools may appeal to enterprises and data scientists.

Nvidia argues it provides the best available hardware for AI. Its GPUs are the cornerstone for high-performance and scientific computing.

But using Nvidia’s proprietary hardware and software is like using the Apple iPhone: you’re getting the best hardware, but once you’re locked in, it will be hard to get out, and it will cost a lot of money over its lifetime.

But paying a premium for Nvidia’s GPUs could bring long-term benefits. For example, Microsoft is investing in Nvidia hardware and software because it presents cost savings and larger revenue opportunities through Bing with AI.

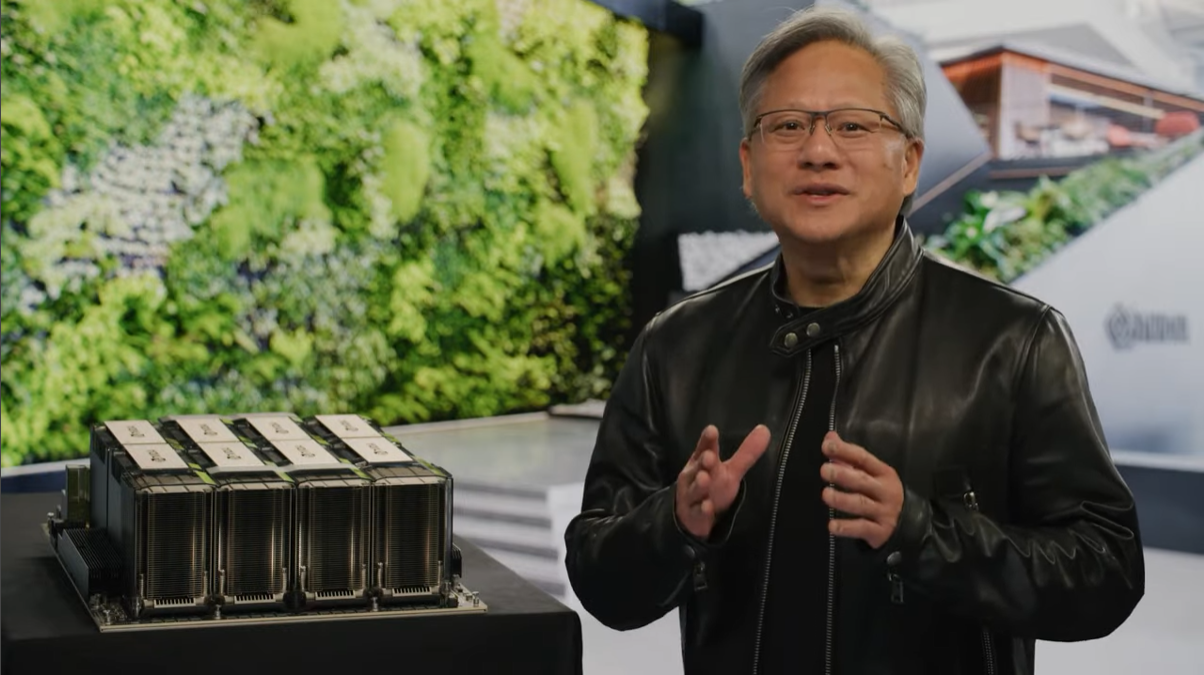

The concept of an AI factory was floated by CEO Jensen Huang, who envisioned data as raw material, with the factory turning it into usable data or a sophisticated AI model. Nvidia’s hardware and software are the main components of the AI factory.

“You just provide your job, point to your data set and you hit go and all of the orchestration and everything underneath is taken care of in DGX Cloud. Now the same model is available on infrastructure that is hosted at a variety of public clouds,” Das said during the briefing.

Millions of people are using ChatGPT-style models, which require high-end AI hardware, Das said.

DGX Cloud furthers Nvidia’s goal to sell its hardware and software as a set. Nvidia is moving into the software subscription business, which has a long tail that involves selling more hardware so it can generate more software revenue.

A software interface, the Base Command Platform, will allow companies to manage and monitor DGX Cloud training workloads.

The Oracle Cloud has clusters of up to 512 Nvidia GPUs, with a 200 gigabits-per-second RDMA network. The infrastructure supports multiple file systems including Lustre and has 2 terabytes per second of throughput.

Nvidia also announced that more companies had adopted its H100 GPU. Amazon is announcing its EC2 “UltraClusters” with P5 instances, which will be based on the H100.

“These instances can scale up to 20,000 GPUs using their EFA technology,” said Ian Buck, vice president of hyperscale and HPC computing at Nvidia, during the press briefing.

EFA stands for Elastic Fabric Adapter, a networking implementation orchestrated by Nitro, an all-purpose custom chip that handles networking, security and data processing.

Meta Platforms has begun the deployment of H100 systems in Grand Teton, the platform for the social media company’s next AI supercomputer.

Source: https://www.hpcwire.com/2023/03/21/nvidias-ai-factory-services-start-at-37000/