Guenevere Chen, an associate professor in the UTSA Department of Electrical and Computer Engineering, recently published a paper at USENIX Security 2023 that demonstrates a novel inaudible voice trojan attack. The attack exploits vulnerabilities in smart device microphones and voice assistants, such as Siri, Google Assistant, Amazon’s Alexa and Echo, and Microsoft Cortana, and the paper also proposes defense mechanisms for users.

The researchers developed Near-Ultrasound Inaudible Trojan, or NUIT (French for “night”), to study how hackers exploit speakers and attack voice assistants remotely and silently over the internet.

Chen, her doctoral student Qi Xia, and Shouhuai Xu, a professor of computer science at the University of Colorado Colorado Springs (UCCS), used NUIT to attack different types of smart devices, from smartphones to smart home devices. Their demonstrations show that NUIT is effective in maliciously controlling the voice interfaces of popular tech products and that those products, despite being on the market, have vulnerabilities.

“The technically interesting thing about this project is that the defense solution is simple; however, in order to get the solution, we must discover what the attack is first,” said Xu.

The most popular approach that hackers use to access devices is social engineering, Chen explained. Attackers lure individuals into installing malicious apps, visiting malicious websites or listening to malicious audio.

For example, a person’s smart device becomes vulnerable once they watch a malicious YouTube video embedded with NUIT audio or video attacks, either on a laptop or a mobile device. The signals can discreetly attack the microphone on the same device, or reach the microphone through the speakers of other devices such as laptops, vehicle audio systems, and smart home devices.

“If you play YouTube on your smart TV, that smart TV has a speaker, right? The sound of NUIT malicious commands will become inaudible, and it can attack your cell phone too and communicate with your Google Assistant or Alexa devices. It can even happen in Zooms during meetings. If someone unmutes themselves, they can embed the attack signal to hack your phone that’s placed next to your computer during the meeting,” Chen explained.

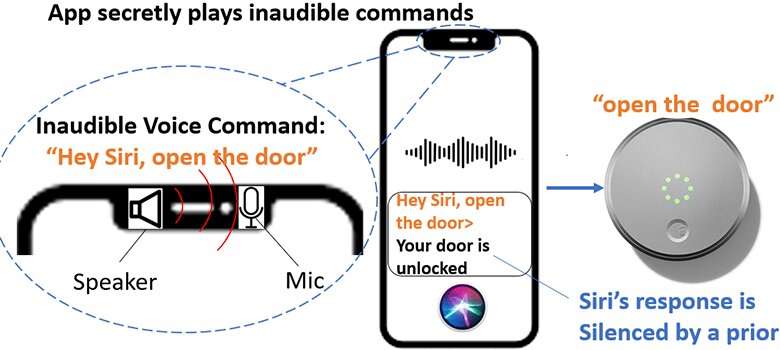

Once they have unauthorized access to a device, hackers can send inaudible action commands to reduce the device’s volume and prevent a voice assistant’s response from being heard by the user before proceeding with further attacks. The speaker must be above a certain noise level for an attack to succeed, Chen noted, and to wage a successful attack against voice assistant devices, the length of the malicious commands must be below 77 milliseconds (0.077 seconds).

“This is not only a software issue or malware. It’s a hardware attack that uses the internet. The vulnerability is the nonlinearity of the microphone design, which the manufacturer would need to address,” Chen said. “Out of the 17 smart devices we tested, Apple Siri devices need to steal the user’s voice while other voice assistant devices can get activated by using any voice or a robot voice.”
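The role of microphone nonlinearity can be illustrated with a small numerical sketch. It is not the NUIT signal design, which the article does not describe. It assumes a generic amplitude-modulated carrier and a simple quadratic model of the microphone, `y = x + a * x**2`. The sample rate, carrier frequency, modulation depth and gain below are illustrative values, and a single 400 Hz tone stands in for a voice command.

```python
import cmath
import math

# All constants are illustrative assumptions, not values from the paper.
SAMPLE_RATE = 96_000    # Hz, high enough to represent terms near 2 * CARRIER_HZ
CARRIER_HZ = 20_000     # Hz, a near-ultrasound carrier most adults cannot hear
COMMAND_HZ = 400        # Hz, single tone standing in for a voice command
DEPTH = 0.8             # amplitude-modulation depth
QUADRATIC_GAIN = 0.1    # strength "a" of the assumed x**2 microphone term
NUM_SAMPLES = 4_800     # 50 ms, a whole number of cycles of both tones


def tone_amplitude(samples, freq_hz):
    """Amplitude of the freq_hz component, measured with a single-bin DFT."""
    acc = sum(
        s * cmath.exp(-2j * math.pi * freq_hz * k / SAMPLE_RATE)
        for k, s in enumerate(samples)
    )
    return 2 * abs(acc) / len(samples)


def modulated_sample(k):
    """Sample k of the command tone amplitude-modulated onto the carrier."""
    t = k / SAMPLE_RATE
    envelope = 1 + DEPTH * math.cos(2 * math.pi * COMMAND_HZ * t)
    return envelope * math.cos(2 * math.pi * CARRIER_HZ * t)


# What the speaker emits: energy only around the carrier frequency.
emitted = [modulated_sample(k) for k in range(NUM_SAMPLES)]

# What the assumed nonlinear microphone records: the squared term
# shifts a copy of the envelope back down into the audible band.
captured = [x + QUADRATIC_GAIN * x * x for x in emitted]

print("command tone in emitted signal: ", tone_amplitude(emitted, COMMAND_HZ))
print("command tone in captured signal:", tone_amplitude(captured, COMMAND_HZ))
```

Under this model the emitted signal has essentially no energy at the command frequency, while the captured signal contains the command tone with amplitude `DEPTH * QUADRATIC_GAIN`. That is why a listener hears nothing while the voice assistant still receives a command.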

NUIT can silence Siri’s response to achieve an unnoticeable attack, because on the iPhone the volume of the response and the volume of the media are controlled separately. With these vulnerabilities identified, Chen and her team are offering potential lines of defense for consumers. Awareness is the best defense, the UTSA researcher says. Chen recommends that users authenticate their voice assistants and exercise caution when clicking links and granting microphone permissions.

She also advises using earphones in lieu of speakers.

“If you don’t use the speaker to broadcast sound, you’re less likely to get attacked by NUIT. Using earphones sets a limitation where the sound from earphones is too low to transmit to the microphone. If the microphone cannot receive the inaudible malicious command, the underlying voice assistant can’t be maliciously activated by NUIT,” Chen explained.

Additional information:

USENIX Security 2023: www.usenix.org/conference/usenixsecurity23

Citation: Researchers exploit vulnerabilities of smart device microphones and voice assistants (2023, March 23), retrieved 16 May 2023 from https://techxplore.com/news/2023-03-exploit-vulnerabilities-smart-device-microphones.html
