A team of computer science researchers with members from Google, ETH Zurich, NVIDIA and Robust Intelligence is highlighting two kinds of dataset poisoning attacks that could be used by bad actors to corrupt AI system results. The group has written a paper outlining the kinds of attacks they have identified and has posted it on the arXiv preprint server.

With the development of deep learning neural networks, artificial intelligence applications have become big news. Because of their unique learning abilities, they can be applied in a wide variety of environments. But, as the researchers on this new effort note, one thing they all have in common is the need for quality data to use for training purposes.

Because such systems learn from what they see, if they come across something that is wrong, they have no way of knowing it, and thus incorporate it into their set of rules. As an example, consider an AI system that is trained to recognize patterns on a mammogram as cancerous tumors. Such systems would be trained by showing them many examples of real tumors collected during mammograms.

But what happens if someone inserts images into the dataset showing cancerous tumors, but they are labeled as non-cancerous? Very soon the system would begin missing those tumors because it has been taught to see them as non-cancerous. In this new effort, the research team has shown that something similar can happen with AI systems that are trained using publicly available data on the Internet.
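The mislabeling scenario can be illustrated with a toy model. The sketch below is not from the paper: it assumes a hypothetical one-dimensional "tumor score" per scan and a simple nearest-centroid classifier, and all numbers are made up for illustration. It only shows how mislabeled training examples can shift a learned decision.

```python
from statistics import mean

def train_centroids(samples):
    """Return the mean feature value per label from (feature, label) pairs."""
    by_label = {}
    for feature, label in samples:
        by_label.setdefault(label, []).append(feature)
    return {label: mean(values) for label, values in by_label.items()}

def predict(centroids, feature):
    """Assign the label whose centroid is closest to the feature."""
    return min(centroids, key=lambda label: abs(centroids[label] - feature))

# Hypothetical 1-D "tumor score": high values stand in for cancerous scans.
clean = [(0.1, "benign"), (0.2, "benign"), (0.3, "benign"),
         (0.8, "cancerous"), (0.9, "cancerous"), (1.0, "cancerous")]

# Poisoned examples: scans that look cancerous but carry a benign label.
poison = [(0.95, "benign")] * 6

clean_model = train_centroids(clean)
poisoned_model = train_centroids(clean + poison)

test_scan = 0.75  # a scan that looks fairly cancerous
print("clean model:   ", predict(clean_model, test_scan))
print("poisoned model:", predict(poisoned_model, test_scan))
```

In this toy setup the clean model flags the test scan as cancerous, while the poisoned model calls the same scan benign, because the mislabeled examples pull the "benign" centroid toward high scores.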

The researchers began by noting that ownership of URLs on the Internet often expires, including for those that have been used as sources by AI systems. That leaves them available for purchase by nefarious types looking to disrupt AI systems. If such URLs are purchased and then used to create websites with false information, the AI system will add that information to its knowledge bank just as easily as it will true information, and that will lead to the AI system producing less than desirable results.

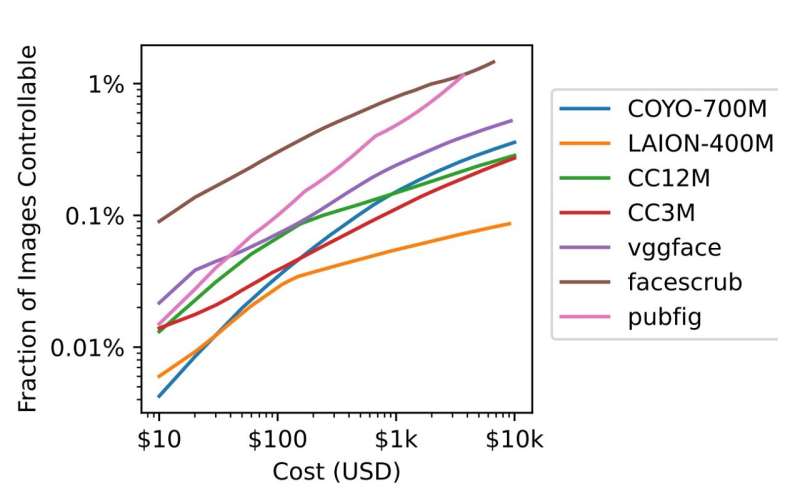

The research team calls this type of attack split-view poisoning. Testing showed that such an approach could be used to purchase enough URLs to poison a large portion of mainstream AI systems, for as little as $10,000.
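The "split view" is the gap between what a dataset's curator saw at a URL and what someone downloading the dataset later receives from it. The sketch below simulates that gap with in-memory dictionaries instead of real network requests. The URLs, the byte strings and the idea of storing a SHA-256 digest next to each URL are assumptions made for illustration. The article itself does not describe any particular check.

```python
import hashlib

def digest(content: bytes) -> str:
    """SHA-256 hex digest of the content served at a URL."""
    return hashlib.sha256(content).hexdigest()

# What the (simulated) web served when the dataset index was built.
web_at_curation = {
    "http://example.com/cat.jpg": b"cat image bytes",
    "http://expired.example/dog.jpg": b"dog image bytes",
}

# Hypothetical index: each entry keeps the URL and a digest of what was seen.
index = [(url, digest(content)) for url, content in web_at_curation.items()]

# Later, one domain has expired and been re-registered by someone else.
web_at_download = dict(web_at_curation)
web_at_download["http://expired.example/dog.jpg"] = b"attacker-chosen bytes"

def changed_urls(index, web):
    """Return URLs whose current content no longer matches the recorded digest."""
    return [url for url, expected in index if digest(web[url]) != expected]

print(changed_urls(index, web_at_download))
```

An index that stores only URLs has nothing to compare against, which is why content swapped in after a domain changes hands can flow into training data unnoticed.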

There is another way that AI systems could be subverted: by manipulating data in well-known data repositories such as Wikipedia. This could be done, the researchers note, by modifying data just prior to regular data dumps, preventing monitors from spotting the changes before they are sent to and used by AI systems. They call this approach frontrunning poisoning.
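The timing argument behind frontrunning can be written as a one-line condition. The sketch below is a simplified model, not the researchers' method: it assumes a snapshot taken at a single known moment and a fixed delay before monitors revert a bad edit. The specific minute values are hypothetical.

```python
def snapshot_contains_edit(edit_time, revert_delay, snapshot_time):
    """True if an edit made at edit_time is still live when the snapshot is taken.

    All values are minutes on a shared clock. The edit is assumed to be
    reverted exactly revert_delay minutes after it was made.
    """
    return edit_time <= snapshot_time < edit_time + revert_delay

SNAPSHOT_TIME = 600   # hypothetical: dump is taken at minute 600
REVERT_DELAY = 30     # hypothetical: monitors revert bad edits within 30 minutes

# An edit made long before the dump is reverted in time and never captured.
print(snapshot_contains_edit(100, REVERT_DELAY, SNAPSHOT_TIME))

# An edit made 10 minutes before the dump is still live when it is taken.
print(snapshot_contains_edit(590, REVERT_DELAY, SNAPSHOT_TIME))
```

Under these assumptions, the attack works only when the dump schedule is predictable enough for the edit to land inside the window before monitors react.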

More information:

Nicholas Carlini et al, Poisoning Web-Scale Training Datasets is Practical, arXiv (2023). DOI: 10.48550/arxiv.2302.10149

© 2023 Science X Network

Citation: Two types of dataset poisoning attacks that can corrupt AI system results (2023, March 7), retrieved 4 April 2023 from https://techxplore.com/information/2023-03-dataset-poisoning-corrupt-ai-results.html
